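This article walks through building a typical K8s cluster (1 master + 3 workers) on virtual machines running CentOS 8.1, using K8s version 1.28.2.

Unless stated otherwise, every step below must be carried out on all servers that join the cluster.

1. Environment preparation

The setup uses four VMware virtual machines (one master and three workers, matching the /etc/hosts entries below), all running CentOS 8.1.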


# uname -a

Linux k8smaster1 4.18.0-147.el8.x86_64 #1 SMP Wed Dec 4 21:51:45 UTC 2019 x86_64 GNU/Linux

# Edit /etc/hosts on every machine

10.10.213.1 k8smaster1

10.10.213.11 k8snode11

10.10.213.12 k8snode12

10.10.213.13 k8snode13

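Because the same entries go onto every machine, the edit can be scripted. The following is a minimal sketch, not the author's method: `add_host_entry` is a hypothetical helper name, and it assumes that simply appending to the hosts file is acceptable. It skips any hostname that is already present, so it can be rerun safely.

```shell
# add_host_entry is a hypothetical helper, not part of any standard tool.
# Usage: add_host_entry <ip> <hostname> [hosts-file]
add_host_entry() {
  local ip="$1"
  local name="$2"
  local file="${3:-/etc/hosts}"
  # Do nothing if some line already mentions this hostname as a whole word.
  if grep -qw -- "$name" "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For example, `add_host_entry 10.10.213.11 k8snode11` appends the entry to /etc/hosts only if k8snode11 is not listed yet.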

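The system ships with many default repositories. We delete them all and add domestic (China) mirrors one by one as needed, which makes package downloads easier. The first one to add back after the cleanup is the base system repository.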


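cd /etc/yum.repos.d

rm -rf *

# (if wget is not installed, curl -o <file> <url> does the same job)

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-8.repo

yum -y install epel-release # many packages are missing from the base repo, so enable EPEL

yum makecache # rebuild the metadata cache for all repositories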

Synchronize time with chrony


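yum install -y chrony

# Comment out the default "server"/"pool" lines and add the Aliyun NTP server after line 1

sed -i -E -e '/^(server|pool) /s/^/#/' -e '1a server ntp.aliyun.com iburst' /etc/chrony.conf

systemctl enable chronyd.service

systemctl restart chronyd.service # restart so the edited config is picked up

timedatectl set-timezone Asia/Shanghai

timedatectl set-local-rtc 0 # keep the hardware clock (RTC) in UTC

timedatectl # show the resulting time settings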

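Disable swap

- Temporarily: run sudo swapoff -a. Swap comes back after a reboot, however, and K8s will then fail to run properly.

- Permanently: open /etc/fstab (vi /etc/fstab), comment out the line that contains swap, and save.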


# If swap cannot be disabled the normal way, mask its systemd unit (replace sdXX with the swap device)

systemctl mask dev-sdXX.swap

# To undo the mask

systemctl unmask dev-sdXX.swap

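What is swap? It is the system's swap space, what Windows usually calls virtual memory: when RAM runs short, part of the disk is used as if it were memory. Why does K8s want it turned off? Mainly for performance: swapped memory is far slower, and K8s expects every workload to stay within the CPU and memory limits of its cluster or node.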
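The permanent fstab edit can also be done non-interactively. This is a sketch under assumptions: `disable_swap_in_fstab` is a hypothetical helper name, and the swap entry is assumed to have `swap` as a whitespace-separated field, as in a default CentOS install. It keeps a `.bak` copy of the file and leaves already commented lines alone.

```shell
# disable_swap_in_fstab is a hypothetical helper name.
# It comments out every active swap entry in an fstab-style file.
disable_swap_in_fstab() {
  local file="${1:-/etc/fstab}"
  # Only touch lines that are not comments and that contain "swap" as a field.
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$file"
}
```

Running `swapoff -a` followed by `disable_swap_in_fstab` covers both the running system and the next boot.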

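Next, turn off the system firewall, SELinux and the like; too many restrictions only complicate the setup. These are routine steps, so they are not covered in detail.

Install Docker

See the earlier installation notes: /2020/11/30113656-docker-cmd.html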


# cat /etc/docker/daemon.json

{
  "registry-mirrors": ["https://h8viyefd.mirror.aliyuncs.com"],
  "insecure-registries": ["10.10.200.11:5000"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-level": "warn",
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "10m",
    "max-file": "5"
  }
}

# The "insecure-registries": ["10.10.200.11:5000"] entry is required here; without it Docker will not trust the LAN registry

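PS: note added 2024-09-16

Before Kubernetes v1.24, using Docker as the container engine under K8s relied on dockershim, a component built into K8s. The v1.24 release removed dockershim, and cri-dockerd took its place (other container runtime interfaces exist as well). Put simply, CRI is the Container Runtime Interface, and cri-dockerd is the CRI implementation that uses Docker as the container engine. So to run K8s on Docker, cri-dockerd has to be deployed first: kubelet talks to cri-dockerd, and cri-dockerd in turn talks to the Docker API, which lets K8s use Docker as its container engine.

Download the matching release from GitHub and install it: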


# Download the cri-dockerd package (general URL pattern first, then the concrete file used here)

# wget https://github.com/Mirantis/cri-dockerd/releases/download/xxx.x86_64.rpm

wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.14/cri-dockerd-0.3.14-3.el8.x86_64.rpm

# Install cri-dockerd

rpm -ivh cri-dockerd-0.3.14-3.el8.x86_64.rpm

# A glibc version problem may show up; check the current version first:

ldd --version

# Upgrading by hand is possible but best avoided: glibc is a core library and the risk is high

wget https://mirrors.tuna.tsinghua.edu.cn/gnu/glibc/glibc-2.40.tar.gz

# Start cri-dockerd

systemctl daemon-reload

systemctl enable cri-docker && systemctl start cri-docker

Configure kernel parameters


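# Set sysctl kernel parameters (optional; you can skip this and start from the next snippet)

# Note: this overwrites /etc/sysctl.conf

cat > /etc/sysctl.conf <<EOF
vm.max_map_count=262144
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

sysctl -p

# Pass bridged IPv4 traffic to iptables

# (the net.bridge.* keys exist only once the br_netfilter module is loaded; if sysctl reports "No such file or directory", run modprobe br_netfilter and apply again)

cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

# Apply the settings

sysctl --system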
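A quick way to confirm that a sysctl file carries all three settings K8s needs is to scan it for them. The sketch below is illustrative only: `missing_sysctl_keys` is a hypothetical helper name, and it checks the file contents, not the live kernel values.

```shell
# missing_sysctl_keys is a hypothetical helper name.
# Usage: missing_sysctl_keys <conf-file>
# Prints every required key that is not set to 1 in the given file.
missing_sysctl_keys() {
  local file="$1"
  local key
  for key in net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables \
             net.ipv4.ip_forward; do
    grep -Eq "^${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$file" || echo "$key"
  done
}
```

`missing_sysctl_keys /etc/sysctl.d/k8s.conf` prints nothing when the file is complete. To check the running kernel instead, `sysctl -n net.ipv4.ip_forward` prints the live value of a single key.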

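# Enable IPVS

yum install -y nfs-utils ipset ipvsadm # install IPVS support

# Write the module-loading script

# (upstream kernels 4.19 and later merged nf_conntrack_ipv4 into nf_conntrack; if the last modprobe fails with "Module not found", use nf_conntrack there and in the lsmod check)

mkdir -p /etc/sysconfig/modules

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF

# One command to run the script, one to verify

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules

lsmod | grep -e ip_vs -e nf_conntrack_ipv4

# Load the overlay and br_netfilter modules

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF

modprobe overlay && modprobe br_netfilter

# Check that the module is loaded

[root@nsk8s210 home]# lsmod | grep br_netfilter

br_netfilter 24576 0

bridge 192512 1 br_netfilter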
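Whether the connection-tracking module is called nf_conntrack_ipv4 or nf_conntrack depends on the kernel, so the name can be probed instead of hard-coded. A minimal sketch, assuming `modinfo` is available; `pick_conntrack_module` is a hypothetical helper name:

```shell
# pick_conntrack_module is a hypothetical helper name.
# Prints the conntrack module name that this kernel provides.
pick_conntrack_module() {
  if modinfo nf_conntrack_ipv4 >/dev/null 2>&1; then
    echo nf_conntrack_ipv4
  else
    # Fallback for kernels where the IPv4 module was merged into nf_conntrack.
    echo nf_conntrack
  fi
}
```

The result can be fed straight to modprobe, for example `modprobe -- "$(pick_conntrack_module)"`.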

SSH trust between servers (optional)


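# Set up SSH trust so that nodes can reach each other without passwords, which helps later automated deployment

ssh-keygen # run on every machine; just press Enter at each prompt

cd /root/.ssh/

mv id_rsa.pub id_rsa.pub.11

# xxx is the SSH port of the target host

scp -P xxx id_rsa.pub.11 root@192.168.139.11:/root/.ssh/

scp -P xxx id_rsa.pub.11 root@192.168.139.12:/root/.ssh/

# Then on every node

cd /root/.ssh/

cat id_rsa.pub.11 >> authorized_keys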

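Install kubeadm, kubelet and kubectl

All nodes need these three tools. The official K8s repository is not reachable from mainland China, so we turn to the Aliyun mirror.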


# cd /etc/yum.repos.d

# vi k8s.repo

# Add the following lines, then save and quit

[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

# Rebuild the repository cache so the K8s packages can be found

yum clean all

yum makecache

First check whether the packages can be found; if they can, install them directly.


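yum list | grep kubeadm # check that the package is available

yum install -y kubeadm # this single command installs the latest kubeadm, kubelet and kubectl

# Mismatched versions often cause problems, so it is better to pin the version instead

yum install -y kubelet-1.28.2 kubeadm-1.28.2 kubectl-1.28.2 --disableexcludes=kubernetes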

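After the install my version turned out to be 1.19.4-0, which was already fairly recent (this remark apparently dates from an earlier run of this guide; pinning the version as above gives 1.28.2). The single install command also pulled in many dependent packages.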


# If the Docker config above contains the following line, the kubelet config must be changed to match

# "exec-opts": ["native.cgroupdriver=systemd"],

[root@k8smaster11 k8s]# vi /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

# Just enable it at boot for now; there is no need to start the service yet

systemctl enable kubelet.service
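Since Docker and kubelet have to agree on the cgroup driver, it helps to read the value back from the Docker config before editing the kubelet side. A small sketch, assuming the driver is set through `exec-opts` as in the daemon.json shown earlier; `docker_cgroup_driver` is a hypothetical helper name:

```shell
# docker_cgroup_driver is a hypothetical helper name.
# Usage: docker_cgroup_driver [daemon.json path]
# Prints the value of native.cgroupdriver, or nothing if it is not configured.
docker_cgroup_driver() {
  local file="${1:-/etc/docker/daemon.json}"
  grep -o 'native\.cgroupdriver=[a-z]*' "$file" | head -n 1 | cut -d= -f2
}
```

On a running host, `docker info --format '{{.CgroupDriver}}'` reports the driver Docker actually uses, which is the value `--cgroup-driver` should match.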

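At this point kubeadm, kubelet and kubectl must be installed successfully on every server. There is no need to start kubelet.service yet; it would not start at this stage anyway.

2. Master configuration

The master and worker servers must be able to reach the Internet, directly or through a gateway proxy; otherwise unexpected errors appear. Keep this in mind!

It may be enough for the servers to share a common gateway; real Internet access is not necessarily required.

Install the required K8s components on the master; they are, of course, delivered as Docker images.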


# Note: these version numbers change over time; just make sure the later kubeadm init --kubernetes-version=1.28.13 matches what is listed here

[root@k8smaster1 bmc]# kubeadm config images list

registry.k8s.io/kube-apiserver:v1.28.13

registry.k8s.io/kube-controller-manager:v1.28.13

registry.k8s.io/kube-scheduler:v1.28.13

registry.k8s.io/kube-proxy:v1.28.13

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.9-0

registry.k8s.io/coredns/coredns:v1.10.1


These images also have to come from Aliyun, so we put the commands into a batch script, docker_master.sh


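mkdir -p /root/k8s/ # create a working directory for temporary files

cd /root/k8s

vi docker_master.sh # paste in the script below

# If the data-center servers have no Internet access, pull the required images from an internal registry instead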

#!/bin/bash

docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.28.13

docker tag registry.aliyuncs.com/google_containers/kube-apiserver:v1.28.13 \
registry.k8s.io/kube-apiserver:v1.28.13

docker rmi registry.aliyuncs.com/google_containers/kube-apiserver:v1.28.13

docker pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.28.13

docker tag registry.aliyuncs.com/google_containers/kube-controller-manager:v1.28.13 \
registry.k8s.io/kube-controller-manager:v1.28.13

docker rmi registry.aliyuncs.com/google_containers/kube-controller-manager:v1.28.13

docker pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.28.13

docker tag registry.aliyuncs.com/google_containers/kube-scheduler:v1.28.13 \
registry.k8s.io/kube-scheduler:v1.28.13

docker rmi registry.aliyuncs.com/google_containers/kube-scheduler:v1.28.13

docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.28.13

docker tag registry.aliyuncs.com/google_containers/kube-proxy:v1.28.13 \
registry.k8s.io/kube-proxy:v1.28.13

docker rmi registry.aliyuncs.com/google_containers/kube-proxy:v1.28.13

docker pull registry.aliyuncs.com/google_containers/pause:3.9

docker tag registry.aliyuncs.com/google_containers/pause:3.9 registry.k8s.io/pause:3.9

docker rmi registry.aliyuncs.com/google_containers/pause:3.9

docker pull registry.aliyuncs.com/google_containers/etcd:3.5.9-0

docker tag registry.aliyuncs.com/google_containers/etcd:3.5.9-0 registry.k8s.io/etcd:3.5.9-0

docker rmi registry.aliyuncs.com/google_containers/etcd:3.5.9-0

docker pull registry.aliyuncs.com/google_containers/coredns:v1.10.1

docker tag registry.aliyuncs.com/google_containers/coredns:v1.10.1 \
registry.k8s.io/coredns/coredns:v1.10.1

docker rmi registry.aliyuncs.com/google_containers/coredns:v1.10.1

If this server has no Internet access (which is how it should be), an internal Docker registry can act as a relay. Pull the images from it first, for example:

docker pull 10.10.200.11:5000/kube-apiserver:v1.28.13

docker pull 10.10.200.11:5000/kube-controller-manager:v1.28.13

docker pull 10.10.200.11:5000/kube-scheduler:v1.28.13

docker pull 10.10.200.11:5000/kube-proxy:v1.28.13

docker pull 10.10.200.11:5000/pause:3.9

docker pull 10.10.200.11:5000/etcd:3.5.9-0

docker pull 10.10.200.11:5000/coredns/coredns:v1.10.1

docker tag 10.10.200.11:5000/kube-apiserver:v1.28.13 registry.k8s.io/kube-apiserver:v1.28.13

docker tag 10.10.200.11:5000/kube-controller-manager:v1.28.13 registry.k8s.io/kube-controller-manager:v1.28.13

docker tag 10.10.200.11:5000/kube-scheduler:v1.28.13 registry.k8s.io/kube-scheduler:v1.28.13

docker tag 10.10.200.11:5000/kube-proxy:v1.28.13 registry.k8s.io/kube-proxy:v1.28.13

docker tag 10.10.200.11:5000/pause:3.9 registry.k8s.io/pause:3.9

docker tag 10.10.200.11:5000/etcd:3.5.9-0 registry.k8s.io/etcd:3.5.9-0

docker tag 10.10.200.11:5000/coredns/coredns:v1.10.1 registry.k8s.io/coredns/coredns:v1.10.1

docker rmi 10.10.200.11:5000/kube-apiserver:v1.28.13

docker rmi 10.10.200.11:5000/kube-controller-manager:v1.28.13

docker rmi 10.10.200.11:5000/kube-scheduler:v1.28.13

docker rmi 10.10.200.11:5000/kube-proxy:v1.28.13

docker rmi 10.10.200.11:5000/pause:3.9

docker rmi 10.10.200.11:5000/etcd:3.5.9-0

docker rmi 10.10.200.11:5000/coredns/coredns:v1.10.1

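Run the batch file above. If an individual pull fails because of network problems, simply run it again. When it finishes you should see the result below.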


# When installing on an internal network, make sure the local images match the output of kubeadm config images list, for example:

[root@k8smaster1 bmc]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.k8s.io/kube-apiserver v1.28.13 5447bb21fa28 4 weeks ago 125MB

registry.k8s.io/kube-controller-manager v1.28.13 f1a0a396058d 4 weeks ago 121MB

registry.k8s.io/kube-proxy v1.28.13 31fde28e72a3 4 weeks ago 81.8MB

registry.k8s.io/kube-scheduler v1.28.13 a60f64c0f37d 4 weeks ago 59.3MB

registry.k8s.io/etcd 3.5.9-0 73deb9a3f702 16 months ago 294MB

registry.k8s.io/coredns/coredns v1.10.1 ead0a4a53df8 19 months ago 53.6MB

registry.k8s.io/pause 3.9 e6f181688397 23 months ago 744kB

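The pull, tag and remove sequence in docker_master.sh repeats the same three commands per image, so it can be generated from a list. The sketch below only prints the commands (a dry run) and is not the author's script; `mirror_commands` and `print_all_mirror_commands` are hypothetical helper names, and the image list is the one printed by `kubeadm config images list` earlier in this article.

```shell
# mirror_commands and print_all_mirror_commands are hypothetical helper names.
# Usage: mirror_commands <source-registry> <name:tag> [target-name:tag]
# Prints the docker pull/tag/rmi commands for one image.
mirror_commands() {
  local src="$1/$2"
  local dst="registry.k8s.io/${3:-$2}"
  echo "docker pull $src"
  echo "docker tag $src $dst"
  echo "docker rmi $src"
}

print_all_mirror_commands() {
  local mirror="registry.aliyuncs.com/google_containers"
  local img
  for img in kube-apiserver:v1.28.13 kube-controller-manager:v1.28.13 \
             kube-scheduler:v1.28.13 kube-proxy:v1.28.13 \
             pause:3.9 etcd:3.5.9-0; do
    mirror_commands "$mirror" "$img"
  done
  # coredns sits one path level deeper on registry.k8s.io than on the mirror.
  mirror_commands "$mirror" coredns:v1.10.1 coredns/coredns:v1.10.1
}
```

Review the output of `print_all_mirror_commands` first; piping it into `bash` then executes the commands. For the internal registry, pass `10.10.200.11:5000` as the first argument of `mirror_commands`.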

Next, configure the K8s master service


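# 1. Initialize the master

# Note: it is best to leave the 10.244.0.0 range unchanged for now; it is used again later

# --dry-run only prints what would be done; drop it to actually initialize the cluster

kubeadm init --kubernetes-version=1.28.13 \
--apiserver-advertise-address=10.10.213.1 \
--service-cidr=10.96.0.0/12 \
--pod-network-cidr=10.244.0.0/16 \
--cri-socket=unix:///var/run/cri-dockerd.sock \
--dry-run

# Optional flag; use it with care, it may cause unexpected errors

# --image-repository=registry.aliyuncs.com/google_containers

# The default K8s registry is k8s.gcr.io or registry.k8s.io, which may be unreachable or very slow from mainland China; this flag sets a custom registry

# --pod-network-cidr=10.244.0.0/16

# Address range for the Pod network add-on. K8s supports several Pod networks; flannel uses 10.244.0.0/16 and calico uses 192.168.0.0/16

# To reset and start over, newer K8s versions need the cri-dockerd socket

kubeadm reset --cri-socket=unix:///var/run/cri-dockerd.sock

# Manual cleanup that may also be needed

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

ipvsadm --clear

rm -rf $HOME/.kube

rm -rf /var/lib/cni/*

rm -rf /var/lib/kubelet/*

rm -rf /etc/cni/*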

# 2. The result looks like this

......

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.10.213.1:6443 --token h465fy.g0ajtebxunf2wph0 --discovery-token-ca-cert-hash \
sha256:064d3ec233957c930d4e7618e9be3bfeb03d5337645c60f1412a5619421abcf9

# 3. Run the three commands printed above

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# 4. Be sure to save these lines; nodes need them to authenticate when joining the cluster

kubeadm join ......

# If you did not save them, run the following on the master to generate a new join command

kubeadm token create --print-join-command

# Also note that a token is valid for 24 hours by default; once it expires, nodes can no longer join and a new token must be generated

kubeadm token list # list the currently valid tokens
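The value after `sha256:` in the join command is a hash of the cluster CA's public key, so it can be recomputed on the master at any time. The sketch below wraps the openssl pipeline given in the kubeadm documentation; `ca_cert_hash` is a hypothetical helper name, and it assumes an RSA CA key, which is what kubeadm generates by default.

```shell
# ca_cert_hash is a hypothetical helper name.
# Usage: ca_cert_hash [path to ca.crt]
# Prints the hex digest that goes after "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local cert="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

Together with a token from `kubeadm token list`, the output is enough to rebuild the `kubeadm join` command by hand.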

# 5. Configure kubectl credentials

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

# 6. Look at the cluster pods and check that each component is in the Running state

# The master still lacks a Pod network at this point, so coredns cannot start yet

[root@k8smaster1 home]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-5dd5756b68-s2l2b 0/1 Pending 0 25m

coredns-5dd5756b68-wsjqk 0/1 Pending 0 25m

etcd-k8smaster1 1/1 Running 0 25m

kube-apiserver-k8smaster1 1/1 Running 0 25m

kube-controller-manager-k8smaster1 1/1 Running 0 25m

kube-proxy-fj7fx 1/1 Running 0 25m

kube-scheduler-k8smaster1 1/1 Running 0 25m

# More detail: kubectl get pod --all-namespaces -o wide

# Inspect a specific pod

kubectl describe pod coredns-5dd5756b68-s2l2b -n kube-system # show the details of one Pod

kubectl logs kube-flannel-ds-8x9f5 -n kube-flannel # show its logs

# 7. Master node status at this point

[root@k8smaster1 home]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster1 NotReady control-plane 25m v1.28.2

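# 8. Set up the flannel network

mkdir -p /root/k8s/

cd /root/k8s

# (the flannel project has since moved to github.com/flannel-io/flannel)

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# The "Network": "10.244.0.0/16" value in this yml must be the same as --pod-network-cidr in kubeadm init;

# otherwise Cluster IP traffic between nodes may not get through.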
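Before applying the manifest, the CIDR in kube-flannel.yml can be compared with the one passed to kubeadm init. A minimal sketch, assuming the manifest contains a `"Network": "<cidr>"` line as described above; `flannel_network` and `cidr_matches` are hypothetical helper names:

```shell
# flannel_network and cidr_matches are hypothetical helper names.
# Usage: flannel_network <manifest>
# Prints the first "Network" CIDR found in a kube-flannel.yml-style file.
flannel_network() {
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Usage: cidr_matches <manifest> <pod-network-cidr>
# Succeeds only when the manifest and the kubeadm value agree.
cidr_matches() {
  [ "$(flannel_network "$1")" = "$2" ]
}
```

For instance, `cidr_matches kube-flannel.yml 10.244.0.0/16 || echo "CIDR mismatch"` flags a manifest that was edited without updating kubeadm, or the other way round.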

# List the images that need to be downloaded

grep image kube-flannel.yml | sort -u

# Consider an image proxy server such as docker.m.daocloud.io

# (if the pulled names differ from those in kube-flannel.yml, retag them with docker tag)

docker pull docker.m.daocloud.io/flannel/flannel:v0.25.6

docker pull docker.m.daocloud.io/flannel/flannel-cni-plugin:v1.5.1-flannel2

# Deploy the add-on

kubectl apply -f kube-flannel.yml

# PS: to uninstall the add-on use kubectl delete -f kube-flannel.yml. Do not use it lightly; it may not clean up completely.

# +++++

# Alternatively, use the calico network:

wget https://docs.projectcalico.org/v3.25/manifests/calico.yaml --no-check-certificate

# Edit the Pod network defined in it (CALICO_IPV4POOL_CIDR) so that it equals --pod-network-cidr from kubeadm init

# +++++

# At this point coredns may be stuck, with an error saying /run/flannel/subnet.env cannot be found

kube-system coredns-5dd5756b68-s2l2b 0/1 ContainerCreating 0 20h

kube-system coredns-5dd5756b68-wsjqk 0/1 ContainerCreating 0 20h

# Create the file by hand

vi /run/flannel/subnet.env

FLANNEL_NETWORK=10.244.0.0/16
FLANNEL_SUBNET=10.244.0.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true

# 9. The system now starts the services on its own; wait a moment, then check the master status again

# (the sample output below shows host k8smaster11 at v1.19.4; on the cluster built here expect k8smaster1 and v1.28.2)

[root@k8smaster11 k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster11 Ready master 35m v1.19.4

# Output like the following is what a healthy state looks like

[root@k8smaster11 ~]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-f9fd979d6-kcvlg 1/1 Running 1 19h

kube-system coredns-f9fd979d6-nwj2h 1/1 Running 1 19h

kube-system etcd-k8smaster11 1/1 Running 1 19h

kube-system kube-apiserver-k8smaster11 1/1 Running 2 19h

kube-system kube-controller-manager-k8smaster11 1/1 Running 0 91m

kube-system kube-flannel-ds-crv75 1/1 Running 1 18h

kube-system kube-proxy-kr5t8 1/1 Running 2 19h

kube-system kube-scheduler-k8smaster11 1/1 Running 1 90m
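Rather than reading the node table by eye, the STATUS column can be filtered. The sketch below parses the plain `kubectl get nodes` table shown above; `not_ready_nodes` is a hypothetical helper name, and it treats anything other than exactly `Ready` (including `Ready,SchedulingDisabled`) as not ready.

```shell
# not_ready_nodes is a hypothetical helper name.
# Reads `kubectl get nodes` output on stdin and prints the names of nodes
# whose STATUS column is not exactly "Ready". The header line is skipped.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Typical use is `kubectl get nodes | not_ready_nodes`; empty output means every node reports Ready.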

# 10. Enable command completion (also works on worker machines)

yum install -y bash-completion

source <(kubectl completion bash) # current shell only; lost after a reboot

echo "source <(kubectl completion bash)" >> ~/.bashrc # persists across reboots

source ~/.bashrc

You may run into the warning below; the fix is included.


# Check cluster health (componentstatus is deprecated since v1.19)

kubectl get cs

# scheduler Unhealthy

# controller-manager Unhealthy

# Cause: kube-controller-manager.yaml and kube-scheduler.yaml under /etc/kubernetes/manifests set the port to 0 by default

# - --port=0

# Comment that line out, then restart kubelet on all three machines

systemctl status kubelet.service

systemctl restart kubelet.service

# Reference: https://llovewxm1314.blog.csdn.net/article/details/108458197

3. Worker node configuration

3.1 Workers also need to pull a few images

docker pull 10.10.200.11:5000/kube-proxy:v1.28.13

docker pull 10.10.200.11:5000/pause:3.9

docker tag 10.10.200.11:5000/kube-proxy:v1.28.13 registry.k8s.io/kube-proxy:v1.28.13

docker tag 10.10.200.11:5000/pause:3.9 registry.k8s.io/pause:3.9

docker rmi 10.10.200.11:5000/kube-proxy:v1.28.13

docker rmi 10.10.200.11:5000/pause:3.9

docker pull 10.10.200.11:5000/flannel/flannel:v0.25.6

docker pull 10.10.200.11:5000/flannel/flannel-cni-plugin:v1.5.1-flannel2

3.2 Copy the config file to the worker and enable K8s commands there


# 1. Copy admin.conf to the worker node (run on the master)

scp -P 22 /etc/kubernetes/admin.conf root@10.10.xx.xx:/etc/kubernetes

# 2. Set the environment variable (run on the worker)

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

3.3 Register the new node


# Join the current worker to the cluster

# (if kubeadm complains about multiple CRI endpoints, append --cri-socket=unix:///var/run/cri-dockerd.sock)

kubeadm join 10.10.213.1:6443 --token h465fy.g0ajtebxunf2wph0 --discovery-token-ca-cert-hash \
sha256:064d3ec233957c930d4e7618e9be3bfeb03d5337645c60f1412a5619421abcf9

# Check the cluster status on the master:

kubectl cluster-info

kubectl get cs

kubectl get pod --all-namespaces

kubectl get nodes

kubectl get service

kubectl get serviceaccounts

3.4 Remove a node

Run the commands below on the master and then on the worker, in that order, and afterwards repeat the registration steps from 3.3


# [root@k8smaster11 ~]#

kubectl delete nodes k8snode13

# [root@k8snode13 ~]#

docker ps -qa | xargs docker rm -f

rm -rf /etc/kubernetes/kubelet.conf

systemctl restart docker.service kubelet.service

rm -rf /etc/kubernetes/pki/ca.crt


4. Troubleshooting

How to reset the master


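# Reset K8s on the master and start again from init. If the cleanup is incomplete this usually leads to many problems, so use it with care

kubeadm reset

# Newer setups that use cri-dockerd

kubeadm reset --cri-socket=unix:///var/run/cri-dockerd.sock

# Manual cleanup that may also be needed

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

ipvsadm --clear

rm -rf $HOME/.kube

rm -rf /var/lib/cni/*

rm -rf /var/lib/kubelet/*

rm -rf /etc/cni/*

# As a last resort, remove the base K8s packages as well and start from scratch

sudo yum remove kubectl kubelet kubeadm

sudo snap remove kubectl kubelet kubeadm # only if they were installed via snap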

Joining a worker to a new master


# A worker node that has already been used may fail with errors when it joins a new master.

# The fix is to reset it. Resetting a worker node is fairly easy and rarely causes problems.

kubeadm reset --cri-socket=unix:///var/run/cri-dockerd.sock

# Then clean up and restart

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

systemctl daemon-reload

systemctl restart kubelet

To show a role name for a worker on the master, mark the node with a label:

kubectl label nodes k8snode13 node-role.kubernetes.io/worker=node13 --overwrite

kubectl label nodes k8snode13 node-role.kubernetes.io/worker- # remove the label

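5. Simple examples

A Pod is the smallest and simplest unit that you can create and deploy in K8s. A Pod wraps the application's container (or several containers), storage, its own network IP, and the policy options that govern how the containers run.

5.1 Running one container in a Pod

Now let's create an nginx container in the freshly configured K8s cluster, using the file nginx-svc.yaml.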


apiVersion: v1
kind: Pod
metadata:
  name: nginx-test
  labels:
    app: web
spec:
  containers:
  - name: front-end
    image: nginx:1.7.9
    ports:
    - containerPort: 80

Run the commands


kubectl create -f ./nginx-svc.yaml # create the Pod

kubectl get po # list Pods

kubectl describe po nginx-test # show the Pod's details

kubectl exec -it nginx-test -- /bin/bash # open a shell inside the Pod (container)

5.2 Running multiple containers in a Pod

Manifest file nginx-redis-svc.yaml


apiVersion: v1
kind: Pod
metadata:
  name: rss-site
  labels:
    app: rss-web
spec:
  containers:
  - name: front-nginx
    image: nginx:1.7.9
    ports:
    - containerPort: 80
  - name: rss-reader
    image: redis
    ports:
    - containerPort: 6379 # Redis default port

Run the commands


kubectl create -f ./nginx-redis-svc.yaml

kubectl get po

kubectl describe po rss-site

kubectl exec -it rss-site -c front-nginx -- /bin/bash

kubectl exec -it rss-site -c rss-reader -- /bin/bash

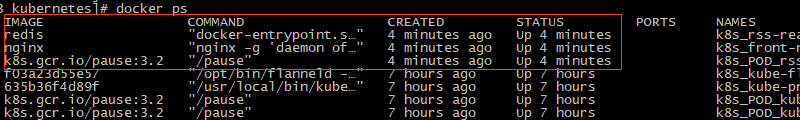

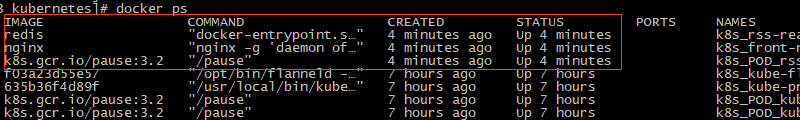

One point worth stressing: the two containers in this Pod share the same root pause container.

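6. A simple command-line example

Instead of writing a manifest file, a simple application can also be set up directly from the command line, as follows: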


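# 1. Create a namespace

kubectl create namespace web-nginx

# To delete it later: kubectl delete namespaces web-nginx

# 2. Create a Deployment

kubectl create deployment deploy-nginx -n web-nginx --image 10.10.200.11:5000/nginx:alpine \
--replicas=3

# -n sets the namespace

# --image sets the image

# --replicas sets the number of replicas

# List the pods that were started

kubectl get pods -n web-nginx -o wide

# To delete it later: kubectl delete deployments.apps deploy-nginx -n web-nginx

# 3. Create a Service to expose the application externally

kubectl create service nodeport deploy-nginx --tcp=80:80 -n web-nginx

# NodePort is a Service type that lets you reach the service from outside through a specific port on the cluster nodes.

# The name after nodeport must be the Deployment name: the generated Service selects Pods labelled app=<name>, which is the label kubectl create deployment applies; with any other name the Service cannot be reached

# Here the cluster IP 10.102.247.136:80 is mapped to port 32042 on the master host (NodePort opens the same port on every node)

# Inside the cluster use 10.102.247.136:80; from outside use master-ip:32042

[root@k8smaster1 bmc]# kubectl get service -n web-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

deploy-nginx NodePort 10.102.247.136 <none> 80:32042/TCP 6h10m

# Print the Service manifest

kubectl get service deploy-nginx -n web-nginx -o yaml

# To delete it later: kubectl delete service deploy-nginx -n web-nginx

# Show the Pod labels

kubectl get pods -n web-nginx --show-labels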
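The reason the Service name has to match the Deployment name becomes visible when the Service is written out as a manifest: the selector is `app: <name>`. The sketch below prints a roughly equivalent manifest for illustration; `nodeport_manifest` is a hypothetical helper name, and the manifest is a hand-written approximation, not the exact object kubectl generates.

```shell
# nodeport_manifest is a hypothetical helper name.
# Usage: nodeport_manifest <name> <namespace> <port>
# Prints a NodePort Service whose selector matches Pods labelled app=<name>.
nodeport_manifest() {
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: $1
  namespace: $2
spec:
  type: NodePort
  selector:
    app: $1
  ports:
  - port: $3
    targetPort: $3
    protocol: TCP
EOF
}
```

`nodeport_manifest deploy-nginx web-nginx 80 | kubectl apply -f -` would create the Service. Compare its `selector` with the labels shown by `kubectl get pods -n web-nginx --show-labels`.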

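# 4. Check how the cluster load-balances (the command really does end in -- sh)

# Run the command below on every node that serves the load to write that machine's hostname into the html file, then refresh the page to see the load-balancing behaviour

# (inside double quotes $(hostname) is expanded by the local shell before kubectl runs, so the node's hostname is written, not the Pod's)

kubectl exec -it <pod-name> -n web-nginx -- sh -c \
"echo $(hostname) > /usr/share/nginx/html/index.html"

# Or enter the container through docker on each node and edit the file directly

docker exec -it k8s_nginx_xxx sh

vi /usr/share/nginx/html/index.html

# 5. Change the number of replicas

kubectl scale deployment <deployment-name> --replicas=0 -n web-nginx

# You can also shut down a node server and watch how the Deployment as a whole responds.

kubectl get deployments.apps --all-namespaces

# Further reading

https://blog.csdn.net/m0_51277041/article/details/139701598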
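Refreshing the page by hand gives only a rough impression of how requests are spread. Once each replica serves a distinct index.html, the responses can be counted instead. A small sketch; `tally_backends` is a hypothetical helper name, and it assumes each response body is a single line without spaces, such as a hostname.

```shell
# tally_backends is a hypothetical helper name.
# Reads one response body per line on stdin and prints "<body> <count>",
# most frequent first.
tally_backends() {
  sort | uniq -c | sort -rn | awk '{ print $2, $1 }'
}
```

For example, `for i in $(seq 1 30); do curl -s http://master-ip:32042; done | tally_backends` shows how many of 30 requests each backend answered, where master-ip:32042 is the NodePort address from step 3.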

References:

https://www.cnblogs.com/shunzi115/p/18010142

https://www.cnblogs.com/qiuhom-1874/p/17279199.html

https://blog.csdn.net/weixin_58376680/article/details/138875835

Configuring a registry mirror to access images on docker.io

https://blog.csdn.net/qq_46274911/article/details/138486623

(End)